GitOps multi-cloud, namespace scoped

My usual tasks include giving presentations and workshops to customers on middleware topics. One of my favorite topics is GitOps, for which I have also created my own demos. Here at Red Hat there is a very nice demo that I used as a basis: the Quinoa wind turbine race, created by my colleague Kevin Dubois. To learn GitOps, I worked through these two free downloadable e-books:

Intro: GitOps Demo

I forked the application code repository and created a second repository for the manifest files (= the YAMLs). I created a Helm chart with everything needed for a complete deployment in one namespace, including Kafka, pipelines and much more. On top of that, I created an ApplicationSet (which you should use nowadays instead of the App of Apps pattern to avoid deadlocks) and a shell script that installs everything you need.
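For readers who have not used ApplicationSets yet, here is a minimal sketch of what such a resource can look like. It is a simplified, hypothetical example and not a copy of the one in the manifests repository: the list generator with one element per stage, the chart path helm, the project default and the application names are assumptions for illustration. A small shell function renders the YAML so that the repository URL can be passed in:

```shell
#!/bin/sh
# Hypothetical sketch: render an ApplicationSet that creates one Application
# per stage (dev, stage, prod), each deploying a Helm chart into the namespace
# gitops-demo-<stage> on the local cluster. Chart path "helm", project
# "default" and the names are assumptions, not the real repository layout.
set -eu

render_applicationset() {
  repo_url="$1"
  # {{stage}} and {{server}} are ApplicationSet template parameters; the shell
  # leaves them untouched, only ${repo_url} is expanded.
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: ApplicationSet
metadata:
  name: quinoa-wind-turbine-race
  namespace: openshift-gitops
spec:
  generators:
    - list:
        elements:
          - stage: dev
            server: https://kubernetes.default.svc
          - stage: stage
            server: https://kubernetes.default.svc
          - stage: prod
            server: https://kubernetes.default.svc
  template:
    metadata:
      name: 'wind-turbine-race-{{stage}}'
    spec:
      project: default
      source:
        repoURL: ${repo_url}
        targetRevision: HEAD
        path: helm
      destination:
        server: '{{server}}'
        namespace: 'gitops-demo-{{stage}}'
      syncPolicy:
        automated: {}
EOF
}

render_applicationset https://github.com/gmodzelewski/quinoa-wind-turbine-manifests
```

Redirect the output to a file and apply it with oc apply -f once you are logged in to the cluster. Each generator element becomes one Application; a stage on another cluster would be one more element with that cluster's API URL as server.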

To install the application, you need two repositories:

- https://github.com/gmodzelewski/quinoa-wind-turbine

- https://github.com/gmodzelewski/quinoa-wind-turbine-manifests

The latter includes an installation script (and an uninstall script) that sets up everything you need. Just run sh 01-install.sh and you are good to go.

To demo the application, I go through these steps:

- Log in to the game's dashboard (username: developer, password: password)

- Play the game on mobile phones

- Show the application code

- Open the code in Dev Spaces

- Change a feature flag

- Show the running pipeline build

- Show the result

- Play the game again

Now to the new things I wanted to write about:

Fancy and new

A customer told me that they only ever give their own customers one or more namespaces; none of them get administrative rights on the cluster, only on the namespace level. In addition, there are many clusters (more than 42), and on each of them customers again only have access to their namespaces. So, as an application developer, you would have access to more than 126 (42 × 3) namespaces, named gitops-demo-dev, gitops-demo-stage and gitops-demo-prod on each cluster. The resulting challenge: how can this be deployed as automatically as possible?

Of course, I would recommend the ApplicationSet here as well, so I wanted to extend my demo accordingly. That left three issues/features to develop:

- roll out in multiple clusters

- roll out with namespace rights

- ensure that a user in a group sees only what they need and nothing more

1. Roll out in multiple clusters

This was super easy. All I had to do was create a new stage, which I named devRosa (because I deployed it on Red Hat OpenShift Service on AWS, our managed OpenShift offering on AWS), and set its cluster URL (for example: https://api.rosa-xxx.xxx.p1.openshiftapps.com:6443). I also had to connect the two clusters in ArgoCD, which according to the docs is done via the argocd CLI with commands like these:

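```shell
# Hostname of the route of the local ArgoCD (OpenShift GitOps) server
export ARGOCD_URL=$(oc get routes openshift-gitops-server -n openshift-gitops -o jsonpath='{.status.ingress[0].host}')

# Register the ROSA cluster (kubeconfig context) with that ArgoCD server;
# requires an authenticated argocd CLI session
argocd cluster add openshift-gitops/api-rosa-xxx-xxx-p1-openshiftapps-com:6443/cluster-admin --server "${ARGOCD_URL}"
```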
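With more than 42 clusters, typing one argocd cluster add per cluster gets tedious. The sketch below prints the commands for a list of API URLs instead of running them. It assumes that you have already logged in with oc to every cluster as cluster-admin in the openshift-gitops project, so that oc has created kubeconfig contexts following its usual project/api-host-with-dashes:port/user naming, as in the context name above. The function names, the URLs and the ArgoCD host are placeholders.

```shell
#!/bin/sh
# Sketch: print one "argocd cluster add" command per cluster API URL.
# Assumption: oc login already created a kubeconfig context per cluster named
#   <project>/<api host with dots replaced by dashes>:<port>/<user>
set -eu

# Derive the oc-style context name from an API URL (hypothetical helper).
context_name() {
  api_url="$1"; project="$2"; user="$3"
  host_port="${api_url#https://}"
  printf '%s/%s/%s\n' "$project" "$(printf '%s' "$host_port" | tr '.' '-')" "$user"
}

# Print (do not run) the registration commands for all given clusters.
print_cluster_add_commands() {
  argocd_url="$1"; shift
  for url in "$@"; do
    printf 'argocd cluster add %s --server %s\n' \
      "$(context_name "$url" openshift-gitops cluster-admin)" "$argocd_url"
  done
}

# In a real session ARGOCD_URL comes from the route lookup shown above.
ARGOCD_URL="${ARGOCD_URL:-openshift-gitops-server-openshift-gitops.apps.example.com}"
print_cluster_add_commands "$ARGOCD_URL" \
  https://api.rosa-xxx.xxx.p1.openshiftapps.com:6443
```

Review the printed lines, then pipe them to sh to actually register the clusters.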

And that’s it. Multi-cluster deployments worked like a charm. The more challenging part was the second one.

2. Roll out with namespace rights

The first challenge was to deploy the ApplicationSet into my own custom namespace and have the ArgoCD instance pick it up. By default, the ArgoCD instance is deployed with only the minimal rights it needs. As a result, the ApplicationSet landed in my namespace (gitops-demo-dev), but nothing happened: the ArgoCD instance showed no errors, no events, nothing.

A colleague recommended that I talk to another Red Hatter, Carlos Lopez Bartolome, who is something of a GitOps genius here. I described the use case and what was failing, and he helped me solve the problem.

The issue is that ArgoCD uses several service accounts, each dedicated to specific tasks and objects. If you run

```shell
oc get sa -n openshift-gitops | grep openshift-gitops
```

you will see several service accounts covering different aspects of the workflow. On OpenShift, you can check RBAC permissions with the oc auth can-i command, for example:

```shell
# Can the ApplicationSet controller read ApplicationSets in my namespace?
oc auth can-i get applicationsets.argoproj.io -n gitops-demo-dev --as system:serviceaccount:openshift-gitops:openshift-gitops-applicationset-controller
```
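To see at a glance which ArgoCD service account is missing which permission, the single can-i check can be wrapped in a loop. This is a sketch: the three service account names are the ones used in this post, while the verb list and the function names are illustrative. The loop needs a logged-in oc session that is allowed to impersonate service accounts, so it is only defined here, not executed.

```shell
#!/bin/sh
# Sketch: run "oc auth can-i" for every ArgoCD service account and verb.
# Service account names as used in this post; verbs are an illustrative choice.
set -eu

GITOPS_NS=openshift-gitops
SERVICE_ACCOUNTS="openshift-gitops-argocd-application-controller
openshift-gitops-applicationset-controller
openshift-gitops-argocd-server"

# Kubernetes user name under which a service account is impersonated.
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Print a yes/no table for one resource in one target namespace.
# Requires cluster access; "oc auth can-i" exits non-zero on "no".
check_permissions() {
  resource="$1"; target_ns="$2"
  for sa in $SERVICE_ACCOUNTS; do
    for verb in get list watch create; do
      answer="$(oc auth can-i "$verb" "$resource" -n "$target_ns" \
        --as "$(sa_user "$GITOPS_NS" "$sa")" || true)"
      printf '%-50s %-7s %s\n' "$sa" "$verb" "$answer"
    done
  done
}

# Example (needs cluster access):
# check_permissions applicationsets.argoproj.io gitops-demo-dev
```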

As you can see, several service accounts need explicit permissions. For my demo, I had already given the application controller service account cluster-admin rights, so I simply granted cluster-admin to the other service accounts as well:

```shell
# Grant cluster-admin to the ArgoCD service accounts in openshift-gitops
oc adm policy add-cluster-role-to-user cluster-admin -z openshift-gitops-argocd-application-controller -n openshift-gitops
oc adm policy add-cluster-role-to-user cluster-admin -z openshift-gitops-applicationset-controller -n openshift-gitops
oc adm policy add-cluster-role-to-user cluster-admin -z openshift-gitops-argocd-server -n openshift-gitops
```

In case you ask: wouldn’t it be better to grant these accounts only minimal rights? Of course, and pull requests are welcome. But these service accounts need to create all kinds of objects in all kinds of user namespaces, so I didn’t spend the time to work that out.
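As a starting point for such a pull request, here is a hypothetical, untested sketch of a narrower setup: instead of cluster-admin, bind the built-in admin ClusterRole to the ArgoCD service accounts only in the tenant namespaces, via one RoleBinding per namespace and service account. It does not cover cluster-scoped resources, and whether admin includes the argoproj.io resources depends on the role aggregation on your cluster, so verify the result with oc auth can-i. The script only prints the oc adm policy commands.

```shell
#!/bin/sh
# Hypothetical least-privilege alternative to cluster-admin (untested):
# bind the namespaced "admin" ClusterRole to the ArgoCD service accounts
# inside the given tenant namespaces only. Cluster-scoped resources are
# NOT covered; check the outcome with "oc auth can-i".
set -eu

GITOPS_NS=openshift-gitops
SERVICE_ACCOUNTS="openshift-gitops-argocd-application-controller openshift-gitops-applicationset-controller openshift-gitops-argocd-server"

# Print one "oc adm policy" command per (namespace, service account) pair.
print_grants() {
  for ns in "$@"; do
    for sa in $SERVICE_ACCOUNTS; do
      printf 'oc adm policy add-role-to-user admin system:serviceaccount:%s:%s -n %s\n' \
        "$GITOPS_NS" "$sa" "$ns"
    done
  done
}

print_grants gitops-demo-dev gitops-demo-stage gitops-demo-prod
```

Review the output, then pipe it to sh as a cluster admin. The trade-off is that every new tenant namespace needs its own bindings, which fits the model where cluster admins prepare namespaces anyway.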

3. Ensure that a user in a group sees only what they need and nothing more

Authentication and authorization on my OpenShift cluster are backed by a Red Hat build of Keycloak instance. That means OpenShift uses the users and groups that I manage in my Keycloak instance.

I created a group named gitops-demo-developers and two users, Yessica and Nora, and added only Yessica to the group. The expectation is:

- Yessica should be able to see the gitops-demo-dev|stage|prod namespaces and the deployments in the ArgoCD instance.

- Nora should not.

To solve this, Carlos recommended using ArgoCD AppProjects, which restrict group access to certain applications that are set up in advance.

Creating an AppProject is therefore a cluster admin task, and it must be done in the openshift-gitops namespace. That seemed logical to me, so I added the AppProject to the file that contains my ApplicationSet (wind-turbine-race.yaml):

```yaml
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: quinoa-wind-turbine-race
  namespace: openshift-gitops
spec:
  sourceNamespaces:
    - gitops-demo-*
  clusterResourceWhitelist:
    - group: '*'
      kind: '*'
  destinations:
    - namespace: 'gitops-demo-*'
      server: '*'
  sourceRepos:
    - '*'
  roles:
    - name: admin
      description: Privileges to quinoa-wind-turbine-race
      policies:
        - p, proj:quinoa-wind-turbine-race:admin, applications, get, quinoa-wind-turbine-race/*, allow
      groups:
        - cluster-admin
        - gitops-demo-developers
```

ArgoCD now knows that the project's role grants the groups gitops-demo-developers and cluster-admin read access (get) to the applications of this project, and that those applications may only be deployed to gitops-demo-* namespaces.
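Since a cluster admin has to create one such AppProject per tenant, it can help to render it from a template. The function below is a sketch that reproduces the AppProject above with the project name, namespace prefix and group as parameters; the function name is hypothetical. Unlike the original, it omits clusterResourceWhitelist, so applications in the project may not manage cluster-scoped resources; add it back if your tenants need that.

```shell
#!/bin/sh
# Sketch: render a per-tenant AppProject (hypothetical helper).
# Parameters: project name, namespace prefix, group allowed to view apps.
# clusterResourceWhitelist is intentionally omitted (no cluster-scoped resources).
set -eu

render_appproject() {
  project="$1"; ns_prefix="$2"; group="$3"
  cat <<EOF
apiVersion: argoproj.io/v1alpha1
kind: AppProject
metadata:
  name: ${project}
  namespace: openshift-gitops
spec:
  sourceNamespaces:
    - '${ns_prefix}-*'
  destinations:
    - namespace: '${ns_prefix}-*'
      server: '*'
  sourceRepos:
    - '*'
  roles:
    - name: admin
      description: Privileges to ${project}
      policies:
        - p, proj:${project}:admin, applications, get, ${project}/*, allow
      groups:
        - ${group}
EOF
}

render_appproject quinoa-wind-turbine-race gitops-demo gitops-demo-developers
```

As a cluster admin, pipe the output to oc apply -f - once per team.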

To check that, I logged in as Yessica and was able to see the deployments as expected. I also logged in as Nora and didn’t see anything at all. That worked!

Learnings

- namespace-scoped deployments require some preparatory tasks by cluster admins, for management reasons

- cluster admins' responsibilities:
  - establish the connection between the clusters and the ArgoCD instances
  - grant the ArgoCD service accounts permissions in the cluster (in my case cluster-admin rights; smaller, restricted permissions would be preferable)
  - deploy AppProjects and assign groups

Kafka Q&A

I had a very interesting discussion with many participants about our AMQ Streams for Kafka product. Here are the most interesting questions and answers as a tl;dr summary:

- Do we have recommendations for monitoring?
  - We even have an application for that: Kafka Console, installable via OperatorHub, see the docs
  - Guide to connect Kafka Console to a Kafka cluster

- What is the vision with KRaft?
  - It is a big topic and often mentioned in the roadmap
  - Example deployment

- Do we have example deployments for MirrorMaker2?

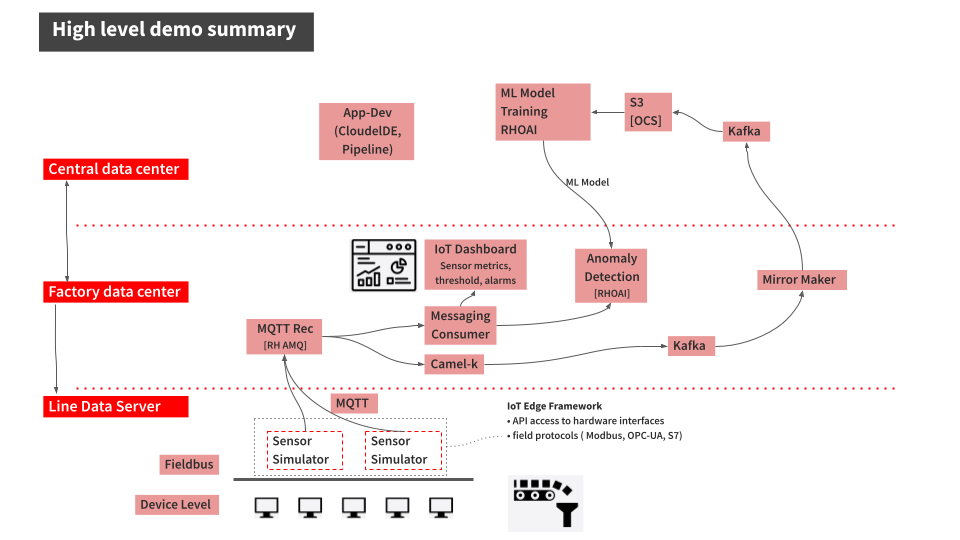

  - Shared the Industrial Edge validated pattern
  - MQTT Rec path to Kafka: Camel K translates messages to the Kafka protocol and puts them into a local edge Kafka; from there, messages are batch-processed into a larger Kafka instance with more compute resources, where complex processing tasks can be performed.
  - Main reason for this pattern: more compute resources in the central data center

- Do we have solution patterns or guides for event streaming use cases that involve Kafka?
  - Yes, a lot. Recently I stumbled upon this link

- What is the recommended solution for a schema registry?
  - In our Application Foundations bundle we have an application called Apicurio. It is a schema registry that can be used with, for example, Avro schemas.
  - Deploy example here
  - Apicurio Docs